Project Management Institute (PMI®) and Agile Alliance® partnered to create an Agile Practice Guide. This experience report describes the recruitment of seven core team members, team formation, and development of the new guide from August 2016 to June 2017.

1. Introduction

Our story begins in August of 2016 when seven agile experts met to collaborate on how we would work and deliver the Agile Practice Guide, a partnership between Agile Alliance and Project Management Institute.

2. Background

We are a geographically distributed team, with people all over North America and New Zealand. While many of us know or knew each other, we had to learn to work together as a team. That work included writing, reviewing, retrospecting, and responding to a variety of requests from the partnership.

Mike Griffiths is the chair and Johanna Rothman is the vice-chair for the Agile Practice Guide. Together, we make a great pair. Mike is a cool, calm, and collected person. He chooses his battles. Johanna is still learning to choose her battles. We both advocated for a more agile approach to the work.

Mike is an independent consultant from Canmore, Alberta, who works in both the agile and traditional PM communities. He served on the board of Agile Alliance and is a regular contributor to the PMBOK® Guide and other PMI standards. Johanna is an independent consultant from Boston, Massachusetts, who works primarily in the agile communities and with people who want to use agile in their work. Johanna was the agileconnection.com technical editor for six years and was the 2009 Agile conference chair.

Our core development team, the writers, worked in iterations for the writing and reviewing. (In addition, we had an extended project team, for guidance.) We did not meet our initial guesses at how much we could do in a two-week iteration. Some of us could only write or review on the weekends. Some people were not available at key points during the project. It looked just like many other agile projects when people have multiple responsibilities.

We were not totally agile. There was an up-front portion, we worked in iterations, and the end was very much waterfall. For example, we were not able to run the review cycles in a more iterative and incremental way, and the final stages of book production are very much waterfall.

When we started, PMI and Agile Alliance had defined the scope and deadlines. We negotiated for what we felt we needed in the outline. Each of us has writing experience, so we negotiated for more autonomy in how we worked and the tone of the document. We won some and we lost some. We describe our work in the “What We Did” section.

As we worked, we paired to produce the content. We used different approaches: writing as a pair and ping-ponging (one person writes, the other person reviews). We pair-reviewed different sections. In this way, we had four eyes on every section. We weren’t perfect, but we were pretty good.

3. Our Story

PMI and Agile Alliance decided they would create a working group with writers from both organizations to write an Agile Practice Guide. This is that story.

3.1 Our Journey

The Agile Practice Guide project started in August 2016. We had to finish writing and editing by May 2017 to allow the standards and production process time to be completed by early September 2017. The goal was to allow PMI to release the Agile Practice Guide with the PMBOK® Guide – Sixth Edition in Q3-2017.

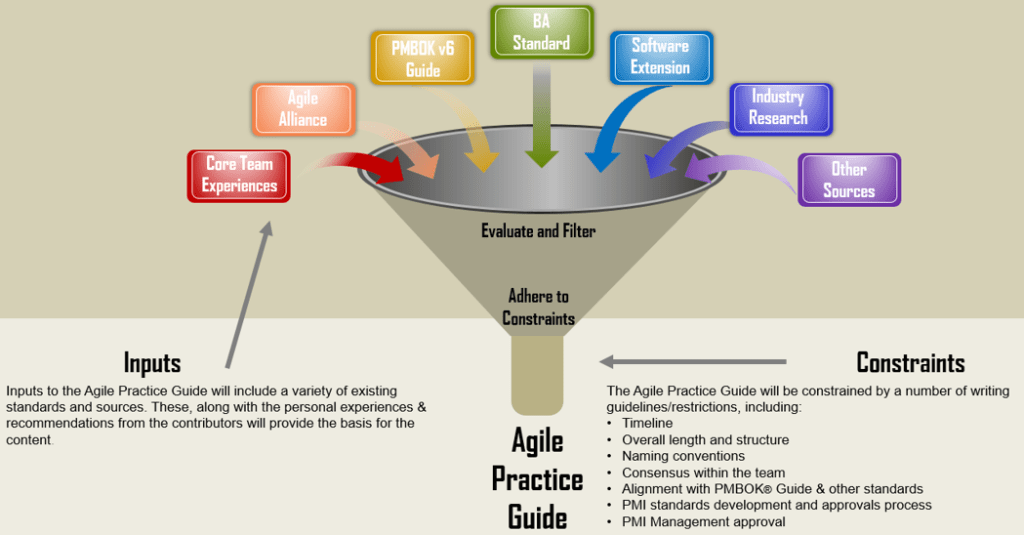

We started with a kick-off meeting in Seattle where we got to meet each other and learn about other members’ backgrounds and the goals of the Agile Practice Guide. In addition to a compressed timeline, our scope and writing also had to conform to a number of constraints to match other PMI standards. These included taking other PMI publications as potential inputs to our guide and aligning with other PMI standards, lexicon of terms and definitions, and the PMI review process.

We created the following image to help explain these various inputs and constraints to the writing team.

Figure 1. Inputs to and constraints on the Agile Practice Guide

3.2 What We Did

At the first meeting in August 2016, we defined what agile meant to us: mindset, values, and frequent delivery. We had some pushback because people wanted to use the “agile” label in a broader setting. We’ve seen the agile label used when people work in iterations or increments or both but don’t embody the agile mindset or values. One of the values we often see missing is frequent delivery for feedback. Settling on that definition laid good groundwork for us to defend our work later.

At that meeting, we agreed on the outline. We created two-week iterations so we could write and review other teams’ work. In each two-week iteration we created different pairs to write and review. That way we wouldn’t write and review the same part.

3.3 How We Worked in Iterations and Pairs

Each pair had a choice of how they worked. We encouraged people to write as a pair. Some pairs did and some preferred to ping-pong: Writer 1 wrote a piece and Writer 2 reviewed it. They switched for the next piece.

Even as early as the initial writing, not everyone was available to work the same amount. That meant that some people did the bulk of that section’s writing and the other part of the pair reviewed. As long as both people in the pair contributed to the document, we didn’t have rules about how to pair.

For reviewing, we encouraged people to pair-review so they could discuss any concerns in real time with each other.

We encountered trouble almost immediately, particularly given our compressed timeline. It took longer than expected to identify and recruit volunteer writers. In addition, two people were not able to be at the August meeting, and one of those later withdrew from the project.

Two weeks after our in-person meeting in Seattle, we asked for another Agile Alliance volunteer. Phil Brock identified a candidate, but the candidate decided against participating after realizing the extent of the commitment. We were down to seven writers, who made up the core volunteer team.

During our Seattle meeting, we each volunteered for two sections of the Guide. We chose four sections in our first iteration to write and review. We planned to write and review the next four in the following iteration. Because we were down a writer, Mike and Johanna floated as the “missing” person in the pairs during this writing time.

The team made substantial progress on the first draft and met again in person in November. That was the first meeting where the writers met the editor from PMI. The writers checked with the editor regarding the tone of the document and some of the document-specific formatting, such as sidebars, tips, and examples. We thought we knew what was expected of us.

Up until November, we used biweekly calls via Zoom so we could see each other. We had a number of milestones in November so we moved to weekly calls to check on our progress. And, in November, we had a face-to-face meeting so that we could understand more of where we were with respect to finishing the work.

Our weekly workloads varied considerably. In weeks when we were writing or reviewing sections of the guide, the time commitment averaged perhaps 2-3 hours plus the weekly 1-hour call. However, when deadlines approached or an urgent call for input was issued, such as “We need all the diagrams recreating in new forms,” this often jumped to 10+ hours a week. Then, when we had to review and process 3,000+ review comments in a short period of time, the workload was 20+ hours a week.

In addition to these ongoing commitments, when we met for face-to-face workshops, we typically worked 9 hours a day. It was a lot of time to volunteer and was driven by the need to develop the guide within 9 months, rather than the average 18-month development timeframe.

At the November meeting, we printed all of the guide written to date and walked through it on the table.

Figure 2. The guide laid out on tables for review

First individually and then as a group, we reviewed the entire document. People added stickies to identify gaps, overlaps, and flow issues. We also added a number of general review comments. Based on all the stickies we created a backlog of work items.

In addition to these stickies, as a group we went through the review comments collected in the Google Docs master version of the document, creating new backlog items for review comments we could not address immediately. We reviewed this new combined backlog to classify work items as either “Now,” for things we wanted to tackle that weekend, or “Later,” for things that needed to be done before turning the document in for editing but did not have to be done right away. We used a third category, “Review,” for things that could potentially happen while the document was being reviewed.

Working in teams, the group processed the backlog items. This took about a month, but we got everything done. We acknowledged the overlap being caused by having a content structure for the practice guide that included Team, Project, and Organization sections. As a team, we decided to merge the Team and Project sections together.

We moved to weekly calls after the face-to-face meeting in November. We realized we still had a significant amount of work to complete before our Subject Matter Expert (SME) review in January. The weekly cadence helped us stay focused on the Guide work, even though we all had our regular jobs.

3.4 Different Working Styles

Because we had a geographically dispersed team, we needed time to learn about people’s preferred communication styles. When we were face to face for our kick-off meeting, communication was not a problem: everyone was very happy to talk and share. As part of our team chartering exercise, we discussed when and how we would like to work as a group. However, once we returned to our regular working locations, some different communication preferences emerged.

Some people were happy to reply to emails for updates and work objectives. Others rarely checked their email and instead preferred instant messaging and tools like Trello. This disconnect created some frustration and crossed wires in the first few weeks of the project. Talking to people individually revealed their preferred communication styles. In the end, we used multiple formats: we posted updates to the central (PMI) Kavi collaboration site, which sends everyone an email with a link to contribute, and we also posted to Trello, which notifies participants via their preferred settings.

As with most projects, we found it better to over-communicate, sending messages in multiple formats. People could ignore duplicates and we wanted to ensure we reached everyone. In hindsight, we should not have assumed that the two members missing from the kick-off meeting would want to communicate in the same way the members present had agreed. The lesson here is that a unanimous vote by the members present does not mean the absent members would vote the same way.

3.5 A Compressed Timeline

The project was under pressure and time-constrained from the beginning. The PMI standards writing process usually takes 18 to 24 months to complete. Several review steps contribute to this duration. These include a review of the proposed document outline by the PMI Standards Advisory Group, an extensive review of the first draft by 50-60 Subject Matter Experts followed by an adjudication process for each comment received, a PMI Lexicon group review of terms used, a PMI Standards Advisory Group review for alignment with other PMI Guides, etc. Because this initiative was a partnership with Agile Alliance, there were also Agile Alliance reviews and feedback to process.

In order to meet the delivery goal, we had to overlap some functions, which resulted in rework. For instance, we started to work on creating content while the content outline (our proposed table of contents) was still being reviewed by the Standards Advisory Group. The advisory group requested several changes to the outline that we then had to accommodate.

After our August meeting, we provided an outline we thought was sufficiently detailed. However, the Member Advisory Group (MAG) expected a more in-depth content outline than the team had provided. Our challenge was whether to spend time redrafting the outline or developing content for the first draft. Karl helped Mike and Johanna make some changes based on MAG feedback, but we got an agreement from the MAG to forgo a complete revamp of the outline. This allowed the writing team to focus on generating the first draft to meet the accelerated development schedule.

Johanna and Mike shielded the team from some of these back-and-forths. It was difficult enough getting people to write content to our accelerated timeline. Changes to the proposed structure this early in the process were largely irrelevant diversions since the content and structure would change so much as the standard developed anyway. Yet we had to follow the process, acknowledging requests for change. These people were important final approvers and so we had to consider and process each request for change.

3.6 Personal or Professional Voice

We did share and discuss most of the suggested changes as a team. The style of writing, or “voice,” we wanted to use quickly became a big issue. We wanted something conversational, as if we were talking to the reader, along the lines of “If you struggle getting good feedback at demos, consider running yesterday’s orders to make it seem more realistic.”

However, PMI Standards traditionally have a more formal, detached style, such as “When engaging stakeholders in end-of-iteration demonstrations, consider basing data sets on yesterday’s orders to show how the features will be used in real life.” Both are valid writing styles. Some would argue a non-personal tone is more professional and fitting of a standards guide. In many agile teams, we recognize we work with humans, who each work differently. Especially in a geographically distributed part-time team, we recognized that we each needed to flex to accommodate other people’s styles. We wanted to model that desired behaviour and take a friendlier, more personal tone.

Sometimes, we felt like we spent almost as much time talking about passive vs. active voice as we did the content of the Guide. That was a function of Johanna’s belief (and experience) that passive introduces more confusion and active diminishes confusion. We were all looking for clarity of expression.

Because the Agile Practice Guide was commissioned by the PMI Standards Group, it should come as no surprise that they had standards for their standards. A casual writing style was not one of the approved standards. Everyone understood why we wanted to use a casual style, but the change went back and forth many times. Initially, PMI suggested that the more casual style should be reserved for sidebar discussions only and regular guidance text should use traditional third-person language. In the end, PMI suggested a chapter review with a volunteer focus group to gather feedback on the layout and style being suggested for the guide.

This independent review revealed nearly unanimous support for a more casual writing style and it was adopted throughout the guide. The team felt this idea for an independent review to settle the writing style issue was a good suggestion by PMI. Obviously, their standards team had good reason to support their standards and the writing team felt limited by dispassionate language. Using a third-party perspective to help determine what the final audience would most likely prefer was a good experiment everyone could buy into.

Another option that was discussed during this process was to reclassify the guide as a “book” rather than a “Guide” developed under the Standards group. A book would not have to follow any of the regular standards for guide development or approval and would be free to use any format or language it likes. This option was discussed at a few meetings and in the end advocates for keeping it as a guide were able to work with the PMI to gain some leeway on the writing style requirements.

3.7 Issues with the Review Process

Early in our writing, the PMI standards specialist asked us for the names of the SME (Subject Matter Expert) reviewers. He wanted 100 names. In his experience, with about 100 names, we might only receive useful feedback from about 20 people. However, we were sure we would receive useful feedback from almost everyone. Since we had to manage all our feedback, we thought 100 reviewers were too many.

In October, Johanna suggested several alternatives to one large beta review. The suggestions included a rolling review, a smaller review team with personal communications, and restricting the beta review to people who had agile experience.

Despite their flexibility on other standard procedures, PMI held firm on their review approach. In retrospect, it made sense, given the insights it generated (see below). However, we ended up with a tremendous amount of feedback. While we found most of the feedback useful, some of it reflected confusion about typical agile terms, and some of our language prompted objections from some reviewers. It’s possible that with a smaller number of reviewers, or if we had been able to embed references in the text, these reviewers would have found the wording more palatable.

3.8 Overwhelmed by Feedback

Standards produced by PMI are subject to peer review and feedback. The Agile Practice Guide was no exception, and an early draft was sent out for review and feedback to over sixty subject matter experts (SMEs). These SMEs were drawn from the membership of PMI and Agile Alliance and included many Agile luminaries.

We received a much larger number of responses than anyone expected. The PMBOK® Guide – Sixth Edition had recently been through its review process and received about 4,500 comments. Given the smaller scope of our guide, we held an internal poll and estimated we would receive between 1,000 and 1,500 comments. To our surprise, we received over 3,000 review comments.

Mike imported the comments document (a Word doc) into a Google spreadsheet that provided freedom around how to process them. If we had used the normal PMI adjudication system we would not have been able to reorganize or collaborate as easily. In addition, we would not have been able to manage the comments in a reasonable time.

Each comment needed to be reviewed by the author team and classified as:

- Accept – We will make the change as described.

- Accept with Modification – We make the change in spirit but may change the wording to better incorporate other comments.

- Defer to Tampa – Tricky topics we wanted to discuss as a group at our next Face-to-Face meeting in Tampa.

- Defer – Good idea but beyond the scope of this release. We will keep the request for consideration in the next edition.

- Reject – We will reject the suggested change and provide an explanation why.

Not only did we have to do this for each comment, but we also had to agree on the decision with our writing partners, which required internal voting among the two or three people who reviewed each comment. This was a time-consuming exercise that came right on the heels of the big writing push we had just completed to make the review draft available.

The review comments exposed some raw emotions. After working so hard to get the draft ready, many of us found the derogatory comments upsetting to read. While it is fine and understandable that many people will not agree with all of the content created, there are good and poor ways of stating this view. Comments along the lines of “I disagree with this statement or claim” and “Where is the supporting data to validate this recommendation?” are valid and need concrete actions to address them. Comments along the lines of “This is hippy BS” are less useful and less actionable.

We had a few calls with our core writing team reassuring them the attacks were not personal. This material just brings out the passion in some people and it is a good sign we are evoking some reaction. We are not sure we convinced anyone. The sheer volume of comments overwhelmed us. We spent many long hours managing the comments and changing the draft in response, in addition to people doing their regular jobs.

Working through the comments, which took a couple of weeks just to read, seemed a poor reward for the work invested to date. Then we still had to decide what to do with each comment, vote on our decision, and collaborate with peers to develop and incorporate the change. People were mature about it. They understood that creating something and presenting it to the world for review exposes you and makes you vulnerable. The process was tremendously valuable for the guide and we had a much better product once we had completed it.

3.9 You Can’t Please Everyone (and Should Not Try to)

Because our SME reviewers were experts in predictive domains and Agile domains, we had polarizing feedback that was often contradictory. The plan-driven, predictive people would make comments like, “You are disparaging predictive approaches, and describing solutions to predictive approaches done wrong, not predictive done right.” The agile SMEs’ comments were along the lines of, “You need to explain why agile approaches are better and not tolerate half-way house, hybrid approaches.”

Often there is no pleasing both of these sets of people with a discussion on a single topic. The enthusiasts at either end of the project spectrum hold quite different views on the world. However, these experts are not the target audience for the Agile Practice Guide. Instead, our target audience is project practitioners who are looking for help navigating the messy middle ground between seemingly contradictory predictive and agile approaches.

One of the ways we shrugged off the harsh criticisms was to reason that if we were upsetting the zealots at either end of the spectrum in about equal amounts, then we had probably struck the right balance for most readers. We were not able to accommodate everyone’s comments. To stay on schedule for publication, we had to defer more suggestions than we would have liked. However, we did read every comment, thought about and discussed how best to incorporate it, and then, wherever possible, updated the document to reflect it.

Every now and then a comment praised something we had written or was just so ridiculous that it was funny to read. While the review process seemed overwhelming at the time, we are grateful (mostly) to everyone who participated. We appreciate your feedback, which helped us tighten and improve the document.

3.10 Writing and Reviewing Revealed Cultural Differences

It was clear to us as a writing team that we had significant cultural differences—a culture clash—with some of the PMI managers and review team. The writers wanted to use agile approaches in all the writing and reviewing. Some of the PMI people repeatedly said, “That’s not how we’ve done it.” That was true. Given their experience and perspective, perhaps it was naive of us to think we could change their approach. However, we were successful in making many changes such as negotiating the removal of the requirement for detailed annotated outline revisions, writing style, new elements for this guide, and the decision to de-scope work due to time constraints and quality concerns.

In addition, some reviewers thought we wrote some silly things. One of the big questions in the comments concerned the idea of the “generalizing specialist.” That term seemed to be anathema to several reviewers. Mike and Johanna didn’t understand their concerns. We didn’t know what to do with their comments.

3.11 Final Redrafting and More Confusions

During our final face-to-face meeting in Tampa, we redrafted most of the Guide. We realized we had a very large section (4) on “Implementing Agile” and decided to split it into 4A and 4B. Some of us thought we would renumber at the very end so we could still work through any remaining comments. However, neither Johanna nor Mike explicitly told the team, and one of us renumbered 4B and the further chapters to 5 and so on.

Many of us were confused until we realized what had happened. We sent an email to explain the confusion and continued to finish the remaining writing and comment integration. It was not a big issue, more a nice reminder that what is logical to one person will often seem illogical to another. Had we been colocated or had daily conversations, this likely would not have occurred. However, in volunteer work, where there is often a lag between efforts, these miscommunications are more likely.

3.12 Late Breaking Changes

One of the problems near the end of the project was this: There is a body in PMI that reviews and can reject guides and standards, even after the teams have finished their writing and reviewing. That body is called the MAG (Member Advisory Group).

When we were all done writing and reviewing, we met in Tampa in March to readjust the Guide’s structure and content. We had a number of comments still to address, the “Defer to Tampa” comments. We knew we would address them in some way and edit/rewrite those sections as needed. We ended up restructuring the guide and rewriting significant portions. That meant we needed a final review from both the MAG and the AA board of directors. We needed to know that they would approve the final document.

Mike exported the document each week so the MAG and AA could review our changes. We had initial positive feedback from the AA. However, we didn’t hear much from the MAG. When we thought we were done, several members of the MAG were concerned about the way we originally wrote about traditional project management. They were concerned enough to consider withholding their approval unless the language changed.

Johanna and Stephen Townsend (from PMI) managed to move through almost all the requests. (Johanna thought some of their objections and requests were a bit silly, but she was able to change the wording to accommodate their requests and not alter the meaning of the Guide.) However, there was one request that Johanna could not see how to change. Johanna was stuck.

Mike took a stab at changing the wording in an image. Johanna didn’t like the wording but she could live with it. We managed to address the MAG’s concerns and we were able to finish the Guide.

3.13 Change is Slower Than You Think

We delivered the final draft to the PMI for final copy editing and illustration finalization on May 3, meeting our deadline. During May and June, the PMI editor had questions and concerns about the Guide. They sent those questions and concerns directly to Mike and Johanna because we were the chair and vice-chair.

However, we had written as a team. We made decisions as a team. And, especially important, we reviewed as a team. We (Mike and Johanna) could not perform the final review without team input and approval. We forwarded these private emails to the team and discovered—again—the power of multiple eyes on the artifacts.

The lesson we learned here is that “management,” as in the people responsible for the final deliverable, might want to have a point person as the one responsible person. Although having a responsible person for input to an Agile team makes sense, having one responsible person deciding on behalf of an Agile team does not. Changing culture—especially for us as one agile team—seemed slower and more difficult than we might have imagined.

4. What We Learned

Along the way, we once again experienced that a project with an agile culture can bounce against organizations that have traditions:

We were not a true cross-functional team. We did not have a copyeditor. As a result, we did not have a “useful” and complete first draft.

We did not get feedback as we wrote and reviewed. We discovered mismatched expectations about the document voice after November. That mismatch created many edits.

When PMI asked for hard delivery dates, we ended up delivering the complete deliverable at the last possible moment, instead of being able to deliver something smaller earlier.

We learned a lot about our process, also:

We maintained our writing cadence and ability to pair. However, we did not successfully establish a regular cadence for retrospectives.

We did not have enough agreement on which collaboration tools to use and when. We used all of Trello, Google Docs, Google Sheets, and Zoom. Not everyone continued to use Trello. Instead, we used Docs and Sheets to better aid our workflow.

We did not demonstrate as we proceeded. Part of this was there was no audience for a demonstration, but we didn’t demonstrate the entire product to ourselves as a team. We were not able to get regular feedback from key stakeholders to see how we were doing as we proceeded. As a result, some of the initial feedback surprised us.

Not everyone could contribute at the same level throughout the project. Collocated dedicated teams don’t have this problem. Distributed teams and people who multitask don’t contribute in the same way. We didn’t have an even distribution of work. We adjusted our workload to accommodate our realities and commitments.

We had to learn how to come to enough of an agreement that we could complete the sections. Some of us are iteration-agile. Some of us are flow-agile. Some use a combination. We each have strongly held opinions. We had to agree on the images and words to be able to complete our work.

The next challenge was corralling a group of agile evangelists to work to a largely waterfall plan and heavily front-loaded production timetable. After much squirming by both groups, we used a hybrid approach for our writing and review that allowed for iterative, incremental development of the first draft of the guide. It also largely satisfied PMI’s production schedule and review gates.

We used limited consensus on much of the Guide. We needed to find wording that we, as a writing team, could live with that addressed review comments. If we, as writers, could live with the current text, that was good enough. We did not have to be happy.

In true agile fashion, what we originally planned and developed in our original outline and document changed and morphed throughout the life of the product being developed based on feedback and stakeholder requirements.

4.1 Lessons Learned from Our Writing

Gaining consensus with experts with differing strongly held opinions is never easy. It is even harder when everyone is an unpaid volunteer who is also geographically dispersed and time-shifted. Luckily we quickly established some team norms and cadences that for the most part worked for everyone.

The content and writing styles recommended by the agile authors fundamentally differed from the standards guidelines used by PMI. We wanted to use a direct, personal writing style using language such as “You may want to consider using X…” but this was contrary to the third-person directive style favored by PMI for its standards. This is a reflection of PMI’s background being in project environments that can be defined upfront and have a focus on process. In contrast, agile approaches assume more uncertainty and focus more on the people aspects. The writing team and our PMI counterparts discussed this at great length. As a “book team” (the writers and our PMI colleagues), we all decided to push for more people-oriented language.

Mike’s personal reflections: I am glad we created this guide. I know agilists will think we did not go far enough and traditionalists will think it is foo-foo nonsense, but that’s one of my measures of success for the guide: addressing the tricky balance between these camps that many practitioners work in day-to-day.

Agile approaches allow teams to deliver in short timeframes, against hard deadlines, and respond to change. Our writing project had all of those characteristics present but we missed multiple deadlines and seemed to struggle on many fronts. I know agile does not allow us to bend the laws of time and space but it feels like we did not adopt all of the principles that we were expounding. It is one thing to build safety within a team so people are happy to share interim results without fear of criticism, but another to release a part-finished product to the community. I think a wiki or evolving public draft would have really helped us.

Johanna’s personal reflections: I’m glad we created the guide. And, I found the writing time challenging. I had started a book about the time the Guide work started. I wrote very little for that book until March. (I finished it in June.) I found some of our work challenging because we were so distributed across many time zones. Am I glad I participated? Yes. Would I do it again? I suspect not. The personal cost to me in time away from my writing and consulting was quite high.

5. Acknowledgements

We thank the other writers on the Guide: Jesse Fewell, Becky Hartman, Betsy Kauffman, Stephen Matola, Horia Slușanschi. We also thank Phil Brock from Agile Alliance and our PMI colleagues: Karl Best, Alicia Burke, Edivandro Conforto, Dave Garrett, Stephen Townsend, Roberta Storer.

We also thank all the SME reviewers who read the entire guide and provided feedback.

We thank our shepherd, Rebecca Wirfs-Brock, for her insightful guidance.